Graph Data Structure Demystified

We use Google Search, Google Maps, and social networks regularly nowadays. One of the things they all have in common is the fact they use

Whether we know it or not, trees are everywhere: computer file systems, the HTML DOM, XML/markup parsers, the PDF format, computer chess games, compilers, and more.

Outline: Binary search trees, BST implementation, Binary heaps, Trie

Binary Search Tree

A binary search tree is a binary tree with a unique feature – all nodes to the

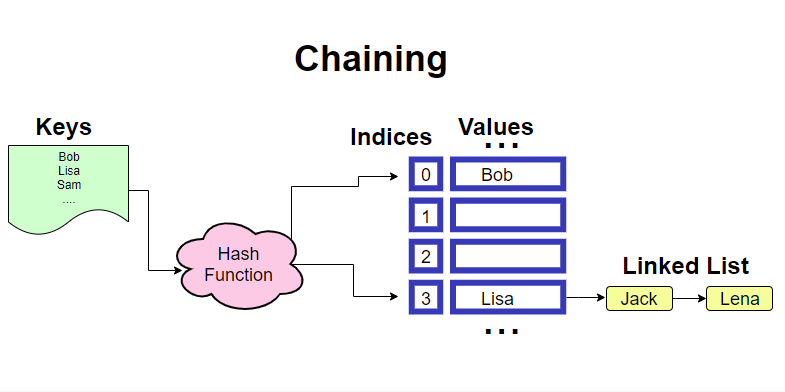

A hash table is a data structure designed for quick lookups and insertions. On average, these operations take O(1), or constant, time. The main component
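
To make the constant-time claim concrete, here is a minimal sketch of a hash table in Python. It assumes separate chaining for collisions and doubles the bucket array once the load factor passes 0.75; the class name `HashTable`, its methods, and those thresholds are illustrative choices, not taken from the post itself.

```python
class HashTable:
    """Illustrative separate-chaining hash table (a sketch, not a production map)."""

    def __init__(self, capacity=8):
        # Each bucket is a list of (key, value) pairs.
        self._buckets = [[] for _ in range(capacity)]
        self._size = 0

    def _index(self, key):
        # Map the key's hash onto a bucket position.
        return hash(key) % len(self._buckets)

    def set(self, key, value):
        bucket = self._buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))
        self._size += 1
        # Assumed policy: grow when the load factor exceeds 0.75,
        # which keeps buckets short and lookups O(1) on average.
        if self._size > 0.75 * len(self._buckets):
            self._resize()

    def get(self, key, default=None):
        for existing_key, value in self._buckets[self._index(key)]:
            if existing_key == key:
                return value
        return default

    def _resize(self):
        # Rehash every pair into a bucket array twice as large.
        pairs = [pair for bucket in self._buckets for pair in bucket]
        self._buckets = [[] for _ in range(2 * len(self._buckets))]
        for key, value in pairs:
            self._buckets[self._index(key)].append((key, value))

    def __len__(self):
        return self._size
```

A lookup hashes the key, jumps straight to one bucket, and scans only the few pairs stored there, which is why the average cost does not grow with the number of entries. The worst case is still O(n) if many keys land in the same bucket.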
