Why Blockchain Is Too Big To Ignore Or Build A Blockchain With JavaScript – Part 2

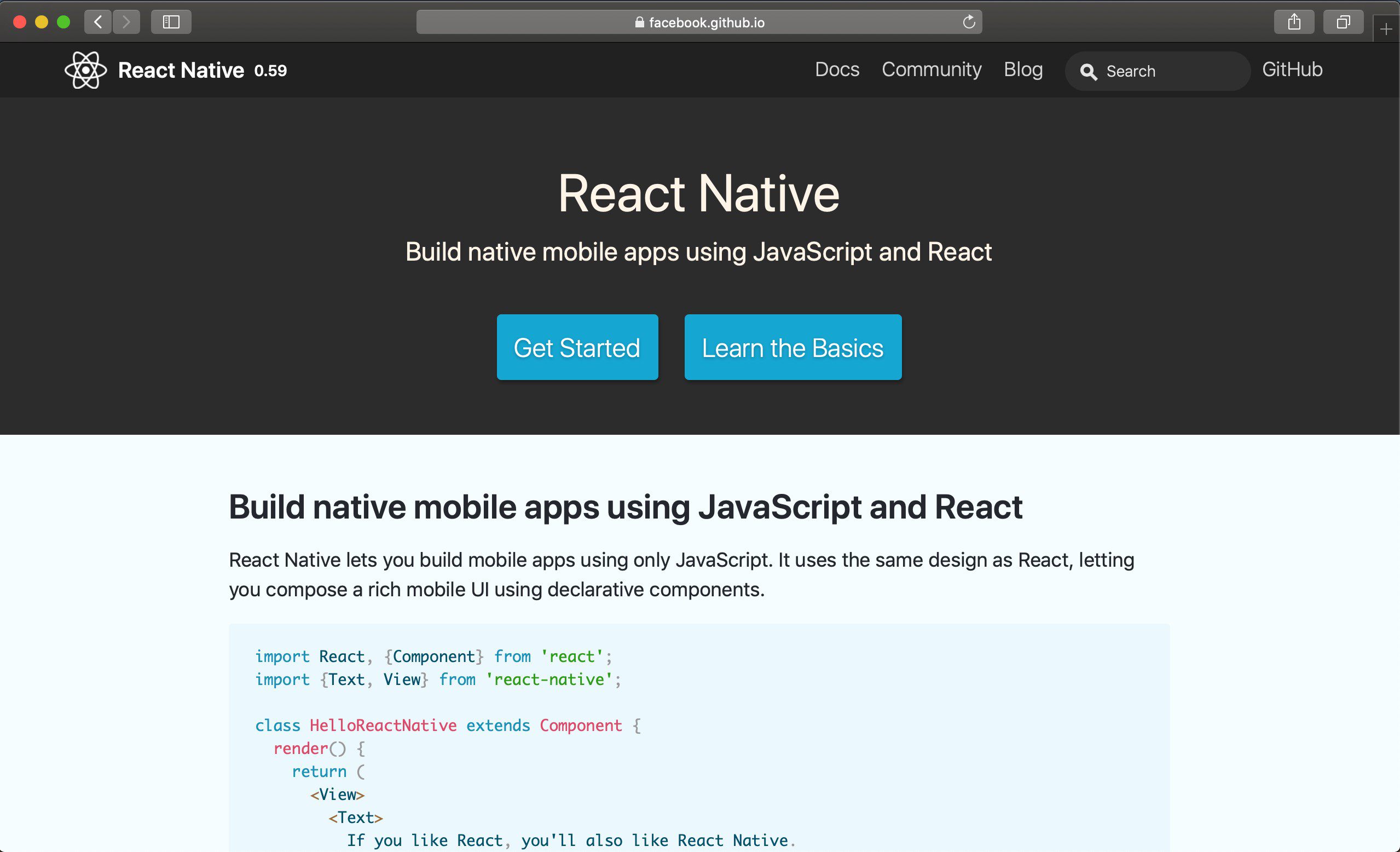

Prerequisites: Basic knowledge of JavaScript

Outline

- Intro
- Block class
- USDevBlockchain
- Mining
- Transactions and rewards
- Transaction signature

Intro

In the first part of this blog, we introduced the notion of a blockchain and covered the basic concepts. You could dig a lot deeper if you want, but that is the minimum knowledge we need to move on to the next step