Costs for building a mobile app in 2025 are changing fast, and what worked last year might not work now. Some apps cost a few thousand dollars, while others run into the millions. But why? What makes the difference? Is it the features, the team, or something else entirely? Before you invest, you need the full picture. Let’s break it down—no fluff, no vague answers, just real numbers and what they mean for your budget.

Small Budget or Big Investment: What It Takes to Build Your Dream App

Building a mobile app in 2025 is all about making the right choices. A small budget can get you started, but will it meet your goals? A bigger investment brings top developers, strong security, and seamless performance. The difference is clear. Cheap apps often need expensive fixes later. Premium development gets it right the first time. Every dollar spent should add value.

How Much Does Mobile App Development Cost at Each Stage?

Mobile app development in 2025 involves multiple stages. Each stage adds to the total cost. Planning ahead helps control expenses. Let’s break down the costs at every step.

Planning & Research

This stage sets the foundation. Developers analyze market trends and target users. Business goals and budgets are defined. Research helps avoid costly mistakes. Simple research costs $5,000. Detailed analysis with expert consultations can go up to $15,000. Competitor analysis and feasibility studies are included. A strong plan saves money later. It ensures smooth development. Skipping this stage can lead to failures. Investing in research prevents unexpected expenses.

Design & Prototyping

This step focuses on visuals and user experience. Professional UI/UX designers create wireframes and app layouts, then build a prototype to test ideas. Basic designs cost around $10,000. More advanced UI/UX work can reach $50,000. Interactive prototypes help refine the user journey. Changes made at this stage are far cheaper than changes made during development. A well-designed app improves engagement, while poor design leads to low user retention. Quality design makes an app stand out.

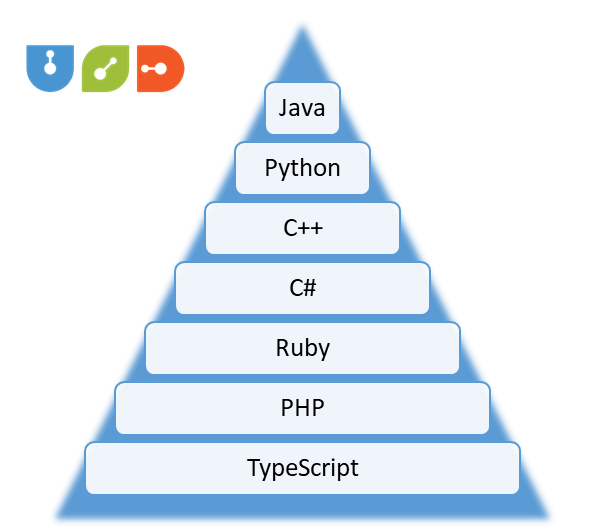

Development & Coding

This is the most expensive stage. Developers write code and build app features. Simple apps cost $40,000 to $100,000. Medium-complexity apps range from $100,000 to $300,000. Advanced apps exceed $500,000. Costs depend on the platform and features. Native iOS and Android apps require separate codebases. Backend development adds to expenses. Integrating third-party APIs increases costs. Skilled developers charge higher rates. Efficient coding speeds up the process. Poor coding leads to expensive fixes later.

Testing & Debugging

Testing ensures the app runs smoothly. Bugs and performance issues are fixed. Basic testing starts at $5,000. Advanced testing with automation costs $30,000 or more. Security testing is crucial. Poor testing leads to crashes and bad reviews. Fixing bugs early saves money. Load testing checks app performance under high traffic. Usability testing improves user experience. Testing should be done before launch. Skipping testing increases risks. A well-tested app gains user trust.

Deployment & Maintenance

Launching the app requires app store approvals. Apple charges $99 per year for its developer program, and Google Play charges a one-time $25 registration fee. Marketing and server costs add up. Initial deployment costs $10,000 to $50,000. Ongoing maintenance is essential. Monthly updates cost $5,000 to $20,000. Fixing bugs and adding features increases expenses. Cloud storage and security updates cost more. Ignoring maintenance shortens an app’s lifespan. Regular updates keep users engaged. Well-maintained apps stay competitive.
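To see how the stages add up, here is a minimal Python sketch that sums the ranges quoted above for a simple app. It is only an illustration: the dictionary and function names are made up for this example, the testing ceiling uses the "$30,000 or more" figure as if it were a cap, and ongoing monthly costs are left out.

```python
# Per-stage cost ranges (USD) taken from the sections above, for a simple app.
# Assumption: the testing upper bound is the quoted "$30,000 or more" figure,
# so the real ceiling may be higher. Ongoing maintenance is not included.
STAGE_COSTS = {
    "Planning & Research": (5_000, 15_000),
    "Design & Prototyping": (10_000, 50_000),
    "Development & Coding": (40_000, 100_000),  # simple-app tier
    "Testing & Debugging": (5_000, 30_000),
    "Deployment": (10_000, 50_000),
}


def total_range(stages):
    """Sum the low ends and the high ends of every stage's range."""
    low = sum(lo for lo, _ in stages.values())
    high = sum(hi for _, hi in stages.values())
    return low, high


low, high = total_range(STAGE_COSTS)
print(f"Estimated build cost: ${low:,} to ${high:,}")
```

With these figures, a simple app lands between $70,000 and $245,000 before any post-launch spending. Swapping in the medium-complexity development range shifts both ends accordingly.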

What You’re Really Paying for in High-End App Development

High-end app development is all about quality, performance, and long-term success. A premium app doesn’t cut corners. It’s built for speed, security, and growth. Every dollar spent goes into expert teams, advanced technology, and seamless user experience. The right investment means fewer problems, better scalability, and higher returns. Let’s break down what makes premium apps worth every penny.

Advanced Technology and Innovation

High-end apps use the latest technology to stay ahead. Faster processing, AI-driven features, and cloud integration make a real difference. These innovations improve user experience and ensure smooth performance. Cutting-edge frameworks reduce bugs and speed up development. Investing in advanced tools means your app won’t feel outdated in a year. A premium app is future-proof. That’s why top companies prioritize innovation over shortcuts.

Custom Architecture for Scalability

A strong app needs a solid foundation. Custom architecture ensures your app can grow with your business. A well-structured backend prevents crashes when traffic increases. Scalable solutions save money in the long run by avoiding costly rebuilds. Every element is designed to handle expansion without losing performance. High-end apps are built to handle tomorrow’s demands. That’s the difference between generic and premium development.

Expert Development and Design Teams

Building a great app requires a skilled team. Experienced developers write clean, efficient code that runs smoothly. UI/UX designers create sleek, user-friendly interfaces that keep people engaged. Quality assurance teams test every detail to prevent glitches. A high-end app is crafted by professionals who understand what works. Investing in experts means fewer headaches and a better final product. That’s what separates top-tier apps from the rest.

Enterprise-Grade Security and Compliance

Security isn’t optional in enterprise app development. Top developers use encryption, secure APIs, and strict access rules to keep user data safe. Poor security can cause data leaks, legal trouble, and loss of trust. Well-built apps follow the compliance requirements of their industry and receive regular updates to block new threats. A secure app protects the company’s reputation. That’s why security comes first, not last.

Continuous Optimization and Dedicated Support

A great app doesn’t stop improving after launch. High-end development includes ongoing updates, performance monitoring, and technical support. Regular optimizations keep the app fast, responsive, and bug-free. Dedicated support teams fix issues before they become major problems. Investing in premium development means long-term success, not just a quick launch. A well-maintained app stays competitive and keeps users happy. That’s why continuous improvement is key to staying ahead.

Let’s compare the development costs for iOS and Android in 2025 to determine which one is more expensive.

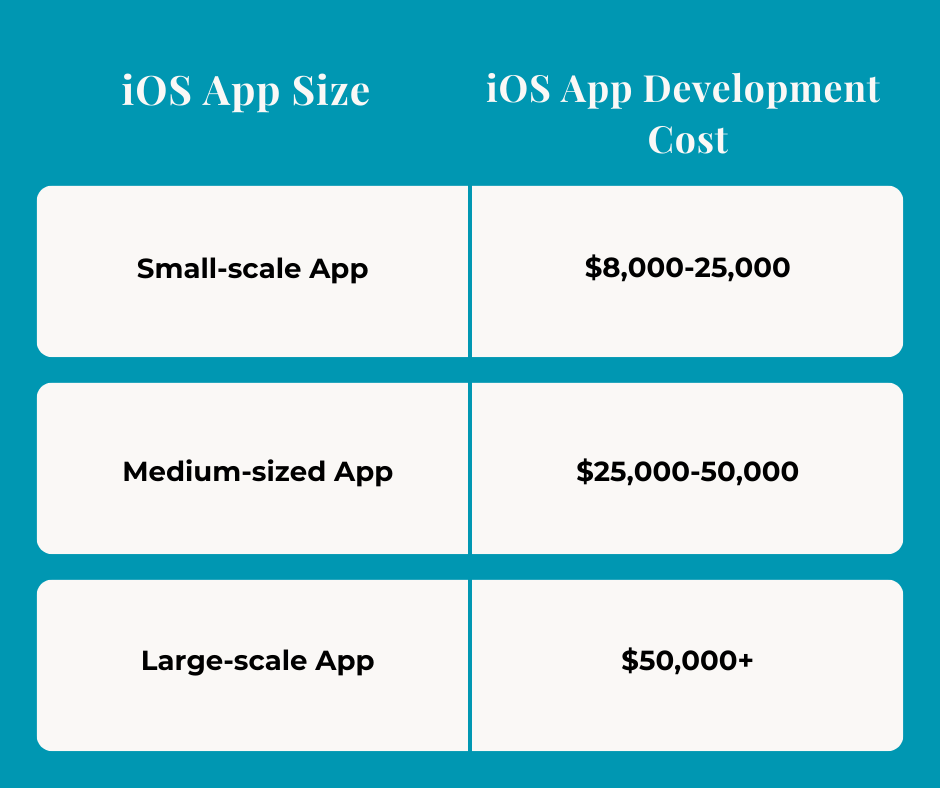

iOS Development Costs

The cost of developing an iOS app varies widely. A basic app may start at $5,000. More advanced apps can cost over $700,000. The total depends on the app’s complexity, its required features, and the location of the development team.

Android Development Costs

Android app costs also vary. Simple apps may cost between $5,000 and $15,000. Apps with more features range from $15,000 to $25,000. Highly advanced apps can start at $25,000 and go beyond $30,000.

In 2025, both Android and iOS app development costs depend on complexity and features. Basic apps cost about the same on both platforms. However, complex iOS apps usually cost more than Android apps because of platform-specific requirements and design needs.

In-House Developers vs Agencies vs Freelancers: Which One Delivers the Best Value for Your Investment?

| Feature | In-House | Agencies | Freelancers |
| --- | --- | --- | --- |
| Cost | High | Medium/High | Low/Medium |
| Expertise | Focused | Broad | Variable |
| Control | Maximum | Medium | Variable |
| Scalability | Low | High | High |
| Management | Internal | Included | Client |
| Commitment | Long-term | Project | Short-term |
| Speed | Variable | Fast | Variable |
| Best For | Core projects | Complex projects | Specific tasks |
| Risk | High employee risk | Agency fit risk | High reliability risk |

How to Estimate App Development Cost?

App development costs depend on features, platforms, and complexity. Mobile app projects in 2025 require careful budgeting. Planning costs upfront helps avoid surprises. Let’s break down the key cost factors.

Project Scope and Complexity

Simple apps cost $40,000 to $100,000. Medium-complexity apps range from $100,000 to $300,000. Large-scale apps with advanced features exceed $500,000. More features mean higher costs. AI, AR, and blockchain increase expenses. Custom integrations cost extra. Native apps for iOS and Android cost more than hybrid apps. Understanding project needs helps control spending. Cutting unnecessary features saves money. Prioritizing core functions keeps development within budget.

Development Team and Location

Costs vary by developer expertise and location. US-based developers charge $100–$250 per hour. Eastern European developers charge $50–$100 per hour. Asian developers cost $20–$80 per hour. Hiring freelancers is cheaper but riskier. Agencies provide reliability but cost more. In-house teams need salaries, tools, and office space. Outsourcing reduces costs but requires careful management. Choosing the right team affects the final budget.
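The hourly rates above turn into a budget once you multiply them by the hours a project needs. The sketch below does that arithmetic in Python. The 1,000-hour figure is a hypothetical input chosen for illustration, not a number from this article, and the names are invented for the example.

```python
# Hourly rate ranges (USD) quoted above, by developer location.
HOURLY_RATES = {
    "United States": (100, 250),
    "Eastern Europe": (50, 100),
    "Asia": (20, 80),
}


def labor_cost(region, hours):
    """Return the (low, high) labor cost for a region and an hour count."""
    low_rate, high_rate = HOURLY_RATES[region]
    return low_rate * hours, high_rate * hours


# Assumption: a hypothetical project needing 1,000 development hours.
ASSUMED_HOURS = 1_000

for region in HOURLY_RATES:
    low, high = labor_cost(region, ASSUMED_HOURS)
    print(f"{region}: ${low:,} to ${high:,}")
```

For the same assumed workload, the gap between regions is large, which is why team location is one of the first choices to settle. The comparison covers labor only and ignores management overhead, which outsourcing tends to increase.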

Technology and Third-Party Services

Backend development, APIs, and cloud services add costs. AWS, Google Cloud, and Firebase have monthly fees. Third-party APIs for payments, maps, and notifications cost extra. Subscription-based tools increase expenses over time. Basic cloud hosting starts at $500 per month. Large apps may need $5,000 monthly hosting. Security features add to the budget. Choosing cost-effective technologies helps manage expenses.

Maintenance and Updates

Development costs don’t stop after launch. Regular updates keep apps running smoothly. Bug fixes and security patches cost $5,000–$20,000 yearly. Adding new features costs extra. Server maintenance costs grow with user traffic. Ignoring updates leads to crashes and bad reviews. Investing in maintenance ensures long-term success. Budgeting for post-launch costs prevents future financial stress.
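Post-launch costs are easier to plan for when expressed per year. As a rough sketch, the Python below combines the yearly bug-fix and patch range from this section with the monthly hosting figures from the previous one. It assumes hosting is billed for all twelve months and leaves out new features and third-party API fees, since no figures are given for them.

```python
# Figures (USD) quoted in this article.
MAINTENANCE_PER_YEAR = (5_000, 20_000)  # bug fixes and security patches
HOSTING_PER_MONTH = (500, 5_000)        # basic hosting vs. large-app hosting


def yearly_running_cost(maintenance, hosting_monthly, months=12):
    """Add yearly maintenance to hosting billed for the given months."""
    low = maintenance[0] + hosting_monthly[0] * months
    high = maintenance[1] + hosting_monthly[1] * months
    return low, high


low, high = yearly_running_cost(MAINTENANCE_PER_YEAR, HOSTING_PER_MONTH)
print(f"Yearly running cost: ${low:,} to ${high:,}")
```

On these numbers, running an app costs roughly $11,000 to $80,000 per year before any new features are added.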

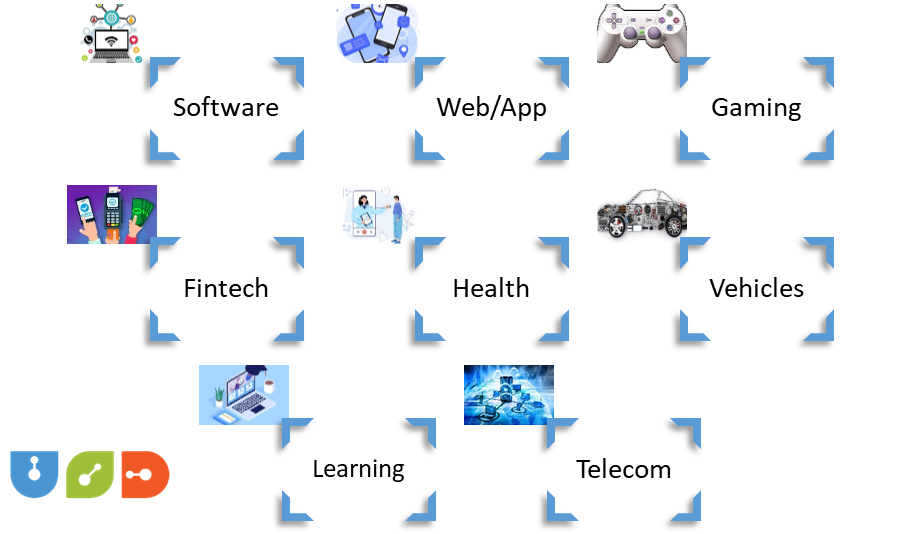

Why Leading Companies Invest Millions in Custom Mobile Apps in 2025

Top companies spend millions on custom apps to stay ahead. A custom app improves customer experience, boosts sales, and strengthens brand loyalty. Mobile app trends in 2025 show rising demand for personalized solutions. Ready-made apps limit growth, but custom ones provide flexibility. A custom app costs $100,000 to over $500,000, depending on its features. High security and advanced analytics add value. Companies that partner with a mobile application development company now are better placed to secure long-term profits.

Conclusion

Investing in custom mobile app development is a smart choice. It increases user engagement and boosts revenue. In 2025, businesses focus on unique digital solutions. Custom apps offer better security, flexibility, and speed. The initial cost is high, but the benefits last long. A quality app helps businesses grow and stay ahead. It improves customer loyalty and ensures success.
